Last week, Fero CEO Berk Birand spoke at the Connected Plant Conference on the crucial role explainability plays in industrial machine learning. Here are some takeaways from the conversation:

Trust is digitalization's biggest obstacle.

“I believe the reason ML is not being used more widely in the industrial sector is because we have a trust issue,” Birand told attendees.

While data scientists place great faith in black-box models, operators can be skeptical. Why should they trust machine learning software over their own hard-earned expertise?

“It’s up to us to generate and earn that trust.”

To earn trust, a machine learning solution must be explainable.

In other words, operators need to understand the predictions and recommendations they get.

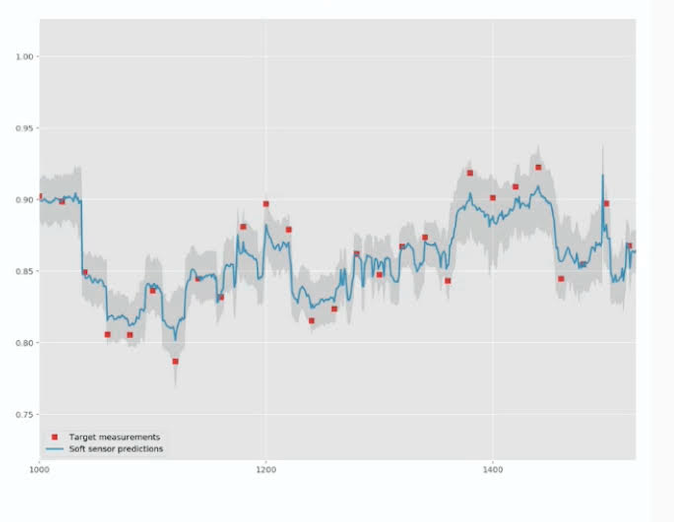

To illustrate explainability in action, Birand shared a use case where Fero predicted viscosity in real time for a large refinery belonging to a major oil and gas producer.

Viscosity was a critical target for the operations team, yet it could only be measured every eight hours. With no measurements in between, the team added extra cutter stock as a buffer to make sure they met their specs. They knew this would reduce margins, but failing to meet specs would be even more costly.

Fero made this process more efficient, minimizing cutter stock use and resulting in over $4 million in savings.

Operators got real-time viscosity estimates between lab tests, as well as a better understanding of which factors affected viscosity. That meant they knew how much cutter stock to add and could adjust their parameters during production without waiting for lab results.

Crucially, Birand noted, the shaded area in the chart shows that the software provided not just a single black-box estimate but a confidence range around it.

"Sometimes the models are not so confident and other times they are very confident," he said. "The operator can use this information to make better decisions."
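The talk doesn't describe how Fero computes its confidence range, so the following is only a generic illustration of the idea, not Fero's method. It's a minimal sketch assuming a bootstrap ensemble: many simple linear models are fit to resampled copies of the training data, and the spread of their predictions serves as a rough confidence range. The function names `fit_line` and `predict_with_range`, and the toy data, are hypothetical.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def predict_with_range(xs, ys, x_new, n_models=200, seed=0):
    """Return (estimate, low, high) for x_new using a bootstrap ensemble.

    Each member is a line fit to a resample (drawn with replacement) of the
    training data. The 5th-95th percentile spread of the members' predictions
    is a rough confidence range for the fitted value; it does not include
    measurement noise, so it is not a full prediction interval.
    """
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2:  # all resampled x equal: slope undefined, skip
            continue
        a, b = fit_line(bx, by)
        preds.append(a + b * x_new)
    preds.sort()
    low = preds[int(0.05 * (len(preds) - 1))]
    high = preds[int(0.95 * (len(preds) - 1))]
    return statistics.fmean(preds), low, high


# Hypothetical toy data: a lab-measured quality y that depends on a process reading x.
data_rng = random.Random(1)
xs = [i * 0.2 for i in range(51)]  # readings from 0 to 10
ys = [2 + 3 * x + data_rng.gauss(0, 0.5) for x in xs]

near = predict_with_range(xs, ys, 5.0)  # inside the range the model has seen
far = predict_with_range(xs, ys, 50.0)  # far outside it
print(near, far)
```

The range comes out narrow near the middle of the training data and much wider far outside it, which is the "sometimes not so confident, other times very confident" behavior an operator can act on.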

You can't trust a black box—and you shouldn't.

In contrast to this explainable solution, Birand said, many machine learning solutions marketed to manufacturers are black boxes. Operators have no way to know what's inside, any more than one can know why Google's image recognition software might mislabel a cat as a potato.

This has many drawbacks. A black-box model isn't built for partial data, nor does it adapt as conditions change. You can train an image recognition model on a database of cats from the past fifty years, because cats look the same today as they did then, but factory behavior changes dramatically within months. So if you train a model on a decade of plant data, "it's like you've trained it for two different factories."

More problematic still, conventional ML is not built to prescribe inputs. In the industrial world, you might want to use data from the first stage of your chemical plant to set parameters for later stages, so that you can be sure to meet specs at end-of-line testing. Most ML methods are not built to answer this question.
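To make "prescribing inputs" concrete, here is a minimal sketch of one simple approach: a brute-force search that inverts a predictive model. It is not how Fero or any particular product does it. Given an upstream measurement, it tries candidate setpoints from cheapest to most expensive and returns the first one whose predicted upper bound stays within spec. The model, its coefficients, the cutter-stock framing, and the names `prescribe_setpoint` and `toy_viscosity_model` are all hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple

# predict(upstream, setpoint) -> (estimate, upper_bound) for end-of-line quality.
Predictor = Callable[[float, float], Tuple[float, float]]


def prescribe_setpoint(
    predict: Predictor,
    upstream: float,
    candidates: Iterable[float],
    spec_max: float,
) -> Optional[float]:
    """Return the smallest candidate setpoint predicted to keep quality within spec.

    Assumes smaller setpoints are cheaper (e.g. less cutter stock), so the
    first candidate, in ascending order, whose upper bound stays at or below
    spec_max is returned. Using the upper bound rather than the point estimate
    builds the model's uncertainty into the safety margin. Returns None if no
    candidate is predicted to meet spec.
    """
    for setpoint in sorted(candidates):
        _, upper = predict(upstream, setpoint)
        if upper <= spec_max:
            return setpoint
    return None


def toy_viscosity_model(upstream: float, cutter: float) -> Tuple[float, float]:
    """Hypothetical linear model with a fixed +/-1.5 uncertainty band."""
    estimate = 40 + 2 * upstream - 5 * cutter
    return estimate, estimate + 1.5


choices = [i * 0.5 for i in range(11)]  # candidate cutter-stock rates 0.0 to 5.0
print(prescribe_setpoint(toy_viscosity_model, upstream=10.0, candidates=choices, spec_max=50.0))
# prints 2.5
```

A real plant model would have many inputs and constraints, so an optimizer would replace the grid search, but the shape of the question is the same: find the inputs, not just the prediction.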

Birand shared the example of a Tesla "thinking" it was being attacked by traffic lights when it was in fact driving behind a truck carrying traffic lights. For all the car's intelligence, it couldn't tell the difference between a stationary traffic light and one moving down the highway.

"This is the problem with black-box models. There’s huge trust in them by data scientists, but not operations teams. And for good reason."